During my time as a visiting researcher at the University of Amsterdam I had the opportunity to interview Richard Rogers, professor of New Media and Digital Culture. He calls himself a “Web epistemologist”, and since 2007 he has been director of the Digital Methods Initiative, a contribution to doing research into the “natively digital”. Working with web data, they strive to repurpose dominant devices for social research enquiries. He is also the author of the book “Digital Methods”. With him I tried to explore the concept of Digital Methods and its relationship with design.

Richard Rogers at Internet Festival 2013, photo by Andrea Scarfò

Let’s begin with your main activity, the Digital Methods. What are they, and what are their aims?

The aims of Digital Methods are to learn from and repurpose devices for social and cultural research. It’s important to mention that the term itself is meant to be a counterpoint to another term, “Virtual Methods”. This distinction is elaborated in a small book, “The End of the Virtual”.

In this text I tried to make a distinction between “Virtual Methods” and “Digital Methods”, whereby with Virtual Methods, what one is doing is translating existing social science methods — surveys, questionnaires et cetera — and migrating them onto the web. Digital Methods is in some sense the study of methods of the medium, that thrive in the medium.

With virtual methods you’re adjusting existing social science methods in minor but crucial details, whereas digital methods are methods that are written to work online. That is why the term “native” is used. They run natively online.

Are virtual methods still used?

Yes, and virtual methods and digital methods could become conflated in the near future and used interchangeably, because the distinction I’m making between the two is not necessarily widely shared.

In the UK there is a research program called “Digital Methods as Mainstream Methodology”, which tries to move the term outside of a niche. Virtual methods, on the other hand, has been more established, with a large Sage publication edited by Christine Hine.

Digital Methods, the book, was awarded the “2014 Outstanding Book of the Year” by the International Communication Association, which gives it recognition by the field, so now the argument could be in wider circulation.

Today, many websites use the term Digital Methods. I was curious to know if you were the first one using it or not.

Yes, the term originated here, at least for the study of the web. The term itself already existed but I haven’t created a lineage or really looked into it deeply. I coined or re-coined it in 2007.

If you look at digitalmethods.net you can find the original wiki entry which situates digital methods as the study of digital culture that does not lean on the notion of remediation, or merely redoing online what already exists in other media.

How do digital methods work?

There is a process, a procedure that is not so much unlike making a piece of software. Digital Methods really borrows from web software applications and web cultural practices. What you do is create a kind of inventory, or stock-taking, of the digital objects that are available to you: links, tags, Wikipedia edits, timestamps, likes, shares.

You first see what’s available to you, and then you look at how dominant devices use those objects. What is Google doing with hyperlinks? What is Facebook doing with likes? And then you seek to repurpose these methods for social research.

You’re redoing online methods for different purposes to those intended. This is the general Digital Methods protocol.

What is your background, and how did you get the idea of Digital Methods?

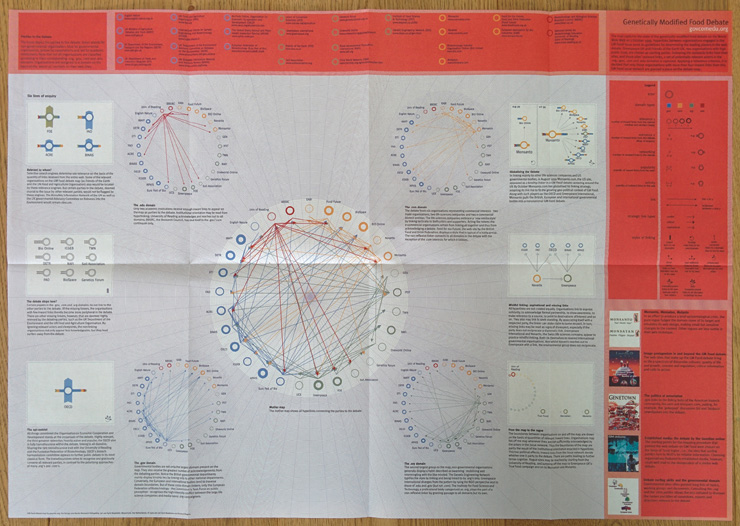

My background is originally in political science and international relations. But most of the work behind Digital Methods comes from a later period and that is from the late ’90s, early 2000s when we founded the govcom.org foundation. With it, we made the Issue Crawler and other tools, and a graphical visual language too for issue mapping.

That’s combined in the book, “Preferred Placement”, and it includes the first issue map that we made: a map of the GM food debate. You can see link maps and a kind of a visual language that begins to describe what we referred to at the time as the “politics of associations” in linking.

It began with a group of people working in the media and design fellowship at the Jan van Eyck Academy in Maastricht, but it also came out of some previous work that I had done at the Royal College of Art, in computer-related design.

Those early works were based on manual work as well. Our very first map was on a blackboard with colored chalk, manually mapping out links between websites. There’s a picture somewhere of that very first map.

So, you created the first map without any software?

Yes. And then we made the Issue Crawler, which was first called the “De-pluralizing engine”.

It was a commentary on the web as a debate space, back in the ’90s when the web was young. New, pluralistic politics were projected onto the web, but with the De-pluralizing Engine we wanted to show hierarchies where some websites received more links than others.

Issue Crawler first came online in a sort of vanilla version in 2001 and the designed version in 2004. The work comes from my “science and technology studies” background and in part from scientometrics and citation analysis.

That area, in some sense, informed the study of links. In citation analysis you study which article references other articles. Similarly, with link analysis you’re studying which other websites are linked to.
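As an editorial illustration of this analogy, the sketch below counts inlinks the way citation analysis counts references. It is a minimal example under stated assumptions and not the Issue Crawler’s actual method: the site names are made up, and `inlink_counts` is a hypothetical helper written for this example.

```python
from collections import Counter

# Hypothetical (source, target) hyperlinks between made-up sites.
links = [
    ("ngo-a.example", "agency.example"),
    ("ngo-b.example", "agency.example"),
    ("blog.example", "agency.example"),
    ("blog.example", "ngo-a.example"),
    ("agency.example", "ngo-a.example"),
]


def inlink_counts(links):
    """Count how many distinct sites link to each target, ignoring self-links."""
    # A set removes repeated links between the same pair of sites.
    distinct = {(source, target) for source, target in links if source != target}
    return Counter(target for _, target in distinct)


# Rank sites by the number of inlinks they receive, highest first.
ranking = inlink_counts(links).most_common()
for site, count in ranking:
    print(site, count)
```

In this toy data, the site receiving the most inlinks plays the role of the most-cited article, which is the kind of hierarchy a link map makes visible.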

Nanotechnology policy map, made with Issue Crawler

Reading your book (Digital Methods), sometimes the research is on the medium, and at other times the studies are conducted through it.

Indeed, Digital Methods do both kinds of research, it is true. There’s research into online culture and culture via online data. It’s often the case that we try to do one or the other, but mainly we simultaneously do both.

With Digital Methods one of the key points is that you cannot take the content out of the medium, and merely analyze the content. You have to analyze the medium together with the content. It’s crucial to realize that there are medium effects, when striving to do any kind of social and cultural research project with web data.

You need to know what a device artifact is, which search results are ‘Google artifacts,’ for example. We would like to undertake research, as the industry term would call it, with organic results, so as to study societal dynamics. But there’s nothing organic about engine results.

And the question is, how do you deal with it? How explicit do you make it? So we try to make it explicit.

I think the goal has always been trying to do social research with web data, but indeed we do both and we also strive to discuss when a project is aligning with one type of research or to the other.

On the web, the medium is changing very quickly. Does this affect your research? Is it a problem?

Well, it’s something we addressed from the beginning, because one of the prescripts of Digital Methods, one of the slogans, has been to follow the medium, and the reason is that the medium changes. You cannot expect to do a sort of standard longitudinal research.

You do not receive the same output out of Facebook nowadays that you had three years ago, or five years ago. The output changed. You can go back in time and harvest Facebook data from five years ago, but Facebook was in many respects a different platform. There was no like button. Similarly, you need to know something about when Google performed a major algorithm update, in order to be able to compare engine results over time.

We are working with what some people call “unstable media”. We embrace that and of course there have been times when our research projects become interrupted or affected by changes in the advanced search, for example in a project created by govcom.org, called “elFriendo”. It is an interesting piece of software where you can use MySpace to do a number of things: create a new profile from scratch, check the compatibility of interests and users, and do a profile makeover.

And this worked very well until MySpace eliminated an advanced search feature. You can no longer search for other users with an interest. So that project ended but nevertheless it remains a conceptual contribution, which we refer to as an approach to the study of social media called post-demographics. This means that you study profiles and interests as opposed to people’s ego or social networks. This project opened up a particular digital methods approach to social media.

Presenting diagrams made by DMI or based on your methods, sometimes I encounter skepticism. Most of the statements are: you cannot prove that the web represents society / when looking at people, you cannot define which portion of the population you are following / when crawling websites, you don’t know what kind of information is missing. Do you receive critiques on DM reliability? How do you answer these?

There is a lot of scepticism toward research that has to do with online culture.

Normally it’s thought that if you’re studying the web you’re studying online culture, but we are trying to do more than that.

A second criticism or concern is that online data is messy, unstructured, incomplete, and it doesn’t really meet the characteristics of good data.

And then the third critique is that even if you make findings with online data you need to ground these findings in the offline, to make them stronger. Working with online data, Digital Methods necessarily needs to be part of a mixed methods approach. This is the larger critique.

How do I answer to these critiques? Well I agree with the spirit of them, but the question that I would like to pose in return is: how do we do Internet research?

One could argue that what you sketched out as critiques apply more to Virtual Methods than to Digital Methods. Because the various expectations to be met, those are the expectations that Virtual Methods are trying to deal with; while Digital Methods is a rather different approach from the start.

We use the web in a kind of opportunistic manner for research. Given what’s there, what can we do? That’s the starting point of Digital Methods.

The starting point is not how do we make a statistical sample of parties to a public debate online. That would be a Virtual Methods concern.

One common word used today is Digital Humanities. Are Digital Methods part of it?

To me, Digital Humanities largely work with digitized materials, while Digital Methods work with natively digital data. And Digital Humanities often use standard computational methods, while Digital Methods may come from computational methods but are written for the web and digital culture.

So the difference between Digital Methods and Digital Humanities is that the latter work with digitized material using standard computational methods.

What’s the difference in using a digitized archive (e.g. digitized letters from 1700) and an archive of born-digital data?

If you work with the web, archiving is different, in the sense that the web is no longer live yet is digital, or what Niels Bruegger calls re-born digital.

So web archives are peculiar in that sense. We could talk more specifically about individual web archives.

Let’s talk about the Wayback Machine and the Internet Archive, for example, which I wrote about in the “Digital Methods” book. It was built in 1996 and reflects its time period, in that it has a kind of surfing mentality built into it as opposed to searching.

It’s also a web-native archive, and is quite different from the national libraries’ web archives: they take the web and put it offline. If you want to explore them, you have to go to the library; they’ve been turned into a sort of institutionalized archive, one in the realm of the library and librarians.

So it is a very different project from the Internet Archive. You can tell that one is far webbier than the other, right?

Another widely used word is big data. Sometimes it is used as synonym for web data. Is it something related to what you do or not?

As you know, I’m one of the editors of the “Big Data & Society” journal, so I’m familiar with the discourse.

Digital methods are not necessarily born in that; they are an approach to social research with web data, so the question is, what’s the size of that web data? Can digital methods handle it?

Increasingly we have to face larger amounts of data. How would one start to think the work is big data? Is it when you need clusters and cloud services? I think when you reach those two thresholds you’re in the realm of big data and we’re nearly there.

The final chapter of my book deals with this, and I think it is important to consider what kind of analysis one does with big data.

Generally speaking, big data call for pattern seeking, so you have a particular type of analytical paradigm, which then precludes a lot of other interpretative ones which are finer grained and close-reading.

Digital Methods are neither only distant reading nor close reading, but can be either. So Digital Methods do not preclude the opportunities associated with big data but they certainly are not dealing exclusively with big data ones.

You created a considerable amount of tools. Some of them are meant to collect data, others contain a visual layer, and some other ones are meant for visualization. How much importance do you give the visual layer in your research? How do you use it?

Our flagship tool, the Issue Crawler, and a lot of subsequent Digital Methods tools, did a number of things. The idea from the beginning was that the tool would ideally collect, analyze and visualize data. Each tool would have a specific method, and a specific narrative, for the output.

The purpose of digital methods tools would not be generic, rather would be specific or in fact, situated, for a particular research. Most of the tools come from actual research projects: tools are made in order to perform a particular piece of research, and not to do research in general. We don’t build tools without a specific purpose.

Analysing links in a network, navigating Amazon's recommendation networks, scraping Google: here you can find all the tools you need.

The second answer is that designers have always been important; the work that I mentioned comes from a sort of confluence of, on the one hand, science studies and citation analysis, and on the other hand, computer-related design.

I was teaching in science studies at the University of Amsterdam and in computer related design at the Royal College of Art, specifically on mapping, and a number of projects resulted from my course, for example theyrule.net.

My research always had a political attitude as well: with analytical techniques and design techniques we’re mapping social issues.

And we map social issues not only for academia; our audience has also been issue professionals, people working in issue areas and in need of maps, graphical artifacts to show and tell before their various issue publics and issue audiences. We’ve always been in the issue communication business as well.

For which public are the visualizations you produce meant?

We have a series of publics: academics, issue professionals, issue advocates, activists, journalists, broadly speaking, and artists. It isn’t necessarily a corporate audience.

Each of those audiences of course has very different cultures, communication styles and needs.

So we try to make tools that are quite straightforward and simple, with simple input and simple output. That’s really the case for the Lippmannian device, also known as Google Scraper, where there are few input fields and you get a single output.

It’s also important for us to try to lower the threshold for use. For the Issue Crawler there are 9000 registered users. Obviously they don’t use it all the time.

Generally speaking the tools are open to use, and that’s also part of the design.

In the last summer schools you invited some former DensityDesign students.

Were you already used to inviting designers?

Yes, govcom.org as well as DMI has always been a collaboration, maybe I should have mentioned this from the beginning, between analysts, programmers, and designers. And then sometimes there is more of one than another, but we always created a communication culture where the disciplines can talk to each other.

Often times the problem, when working in an interdisciplinary project, is that people don’t speak each other’s language. What we’re trying to do is to create a culture where you learn to speak the other’s language. So if you encounter a programmer and say ‘this software is not working’, he would probably ask you to ‘define not working’.

Similarly you won’t go to a designer and just talk about colors, you need a more holistic understanding of design.

It is a research space where the various kinds of professions learn to talk about each other’s practice. It’s something that people in Digital Methods are encouraged to embrace. That has always been the culture here.

DensityDesign students during the 2012 summer school: "Reality mining, and the limits of digital methods". Photo by Anne Helmond

You’ve lots of contacts with design universities. Why did you invite designers from DensityDesign?

Well, because Milan students are already trained in Digital Methods, and I didn’t know that until someone showed me the work by some of you in Milan, using our tools, doing something that we also do, but differently.

What we found so rewarding in Milan is the emphasis on visualizing the research process and the research protocols.

If you look at some of our earlier work, it’s precisely something we would do (for example in “Leaky Content: An Approach to Show Blocked Content on Unblocked Sites in Pakistan – The Baloch Case” (2006)). It is an example of Internet censorship research.

And from the research question you show step by step how you do this particular piece of work, to find out if websites telling a different version of events from the official one are all blocked. So when I saw that DensityDesign was largely doing what we have always naturally done but didn’t really spell it out in design, I thought it was a great fit.

Is there design literature on Digital Methods?

Our work is included, for example, in Manuel Lima’s “Visual Complexity”, and earlier than that our work was taken up in Janet Abrams’s book “Else/Where: Mapping”. She’s also a curator and design thinker I worked with previously at the Netherlands Design Institute, which no longer exists; it was a think-and-do-tank run by John Thackara, a leading design thinker.

In some sense the work that we’ve done has been a part of the design landscape for quite some time, but more peripherally. We can say that our work is not cited in the design discourse, but is occasionally included.

IDEO, a famous design firm, published a job opportunity called “Design Researcher, Digital Methods”. This is an example of how Digital Methods are becoming relevant for design. Is their definition coherent with your idea?

No, but that’s ok. It coheres with a new MA program in Milan, which grew out of digital online ethnography.

Digital Methods in Amsterdam has had little to do with online ethnography, so this idea of online ethnography doesn’t really match with Digital Methods here, but does match with DM done there and elsewhere. Online ethnography comes more from this (showing Virtual Methods book).

IDEO’s job description is not fully incompatible but it’s more a collection of online work that in fact digital agencies do. This particular job description would be for people to build tools, expertise and capacities that will be sold to digital agencies. So these are core competencies for working with online materials.

Is it surprising for you that this job offer uses the term ‘Digital Methods’?

The first thing you learn in science studies is that terms are often appropriated.

How I mean Digital Methods isn’t necessarily how other people would use it, and this appropriation is something that should be welcomed, because when people look up what Digital Methods are, where they came from, when they discover this particular school of thought, hopefully they’ll get something out of the groundwork we’ve done here.

We worked together during the EMAPS project. How do you evaluate DensityDesign’s approach?

I think that DensityDesign’s contribution to EMAPS has been spectacular.

Generally speaking I don’t have criticisms of the DensityDesign contribution, but I have questions and they have to do maybe more generally with design thinking and design research.

Oftentimes designers think from the format first. Often design starts with a project brief, and with it there is already a choice for a format of the output, because you need to have constraints, otherwise people could do anything, and comparison would be difficult. It’s indeed the element of the project brief that we do differently. So maybe it’s worth documenting the differences.

The Digital Methods approach, in terms of working practice, relies on the current analytical needs of subject matter experts, whereby those needs in some sense drive the project.

Working with web data, the standard questions that we ask a subject matter expert are: “What’s the state of the art of your field? What are your analytical needs? And what do you think the Internet could add?”

We let the subject matter expert guide our initial activities, provide the constraints. That’s the brief. Which is a different way of working from the idea that, for example, this project output will be a video.

Another comment I would add is the more Digital Methods have become attuned to the field of information visualization, the more DM has learnt from this field, the more standardized the visualizations have become. Whereas in the past we were wild, and we made things that did not necessarily fit the standards.

One of the questions that have been asked in a project I’m working on is: “are good big data visualizations possible?” But similarly one could ask: “in data visualization, is innovation possible?” Because what we’re currently seeing is increasing standardization.

So then, what is innovation in data visualization? These are the questions I would pose across the board.

Because when designers are working with project partners, I think they learn more about the analysis than about data visualization.

So is imagination now driven by data analysis? The challenge is to think about processes or setups which make innovation possible.
